Datasets & Evaluation Metrics

Voice-over: ../assets/audio/datasets.mp3

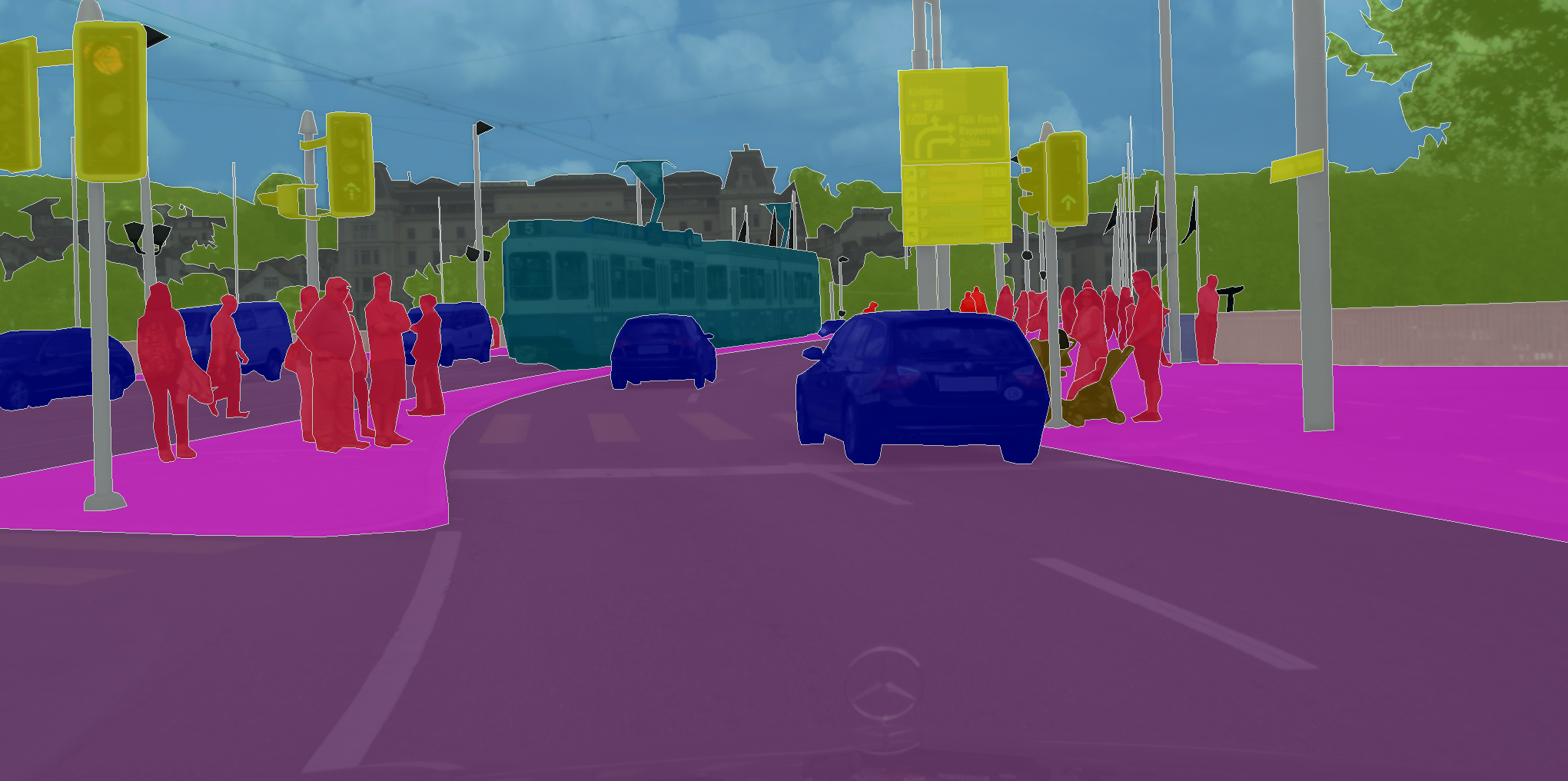

Crosswalk research often combines general-purpose driving datasets with smaller, task-specific sets. Large public datasets help with pretraining and benchmarking (e.g., city scenes, semantic labels), while many papers also curate their own crosswalk crops or masks for fine-tuning and evaluation [1] [7].

Commonly Used Datasets

| Dataset | Type | Why it’s useful |

|---|---|---|

| Cityscapes | Urban street scenes with pixel-level labels | High-quality segmentation ground truth for roads, sidewalks, people, vehicles, etc.; ideal for training/validating segmentation backbones. |

| KITTI | Driving scenes (detection, stereo, tracking) | Standard benchmarks for detector backbones and evaluation protocols. |

| Task-Specific Crosswalk Sets | Custom crops / masks from dashcams or maps | Many papers build small labeled sets of crosswalk patches or masks to fine-tune detectors/segmenters on the target task [1] [7]. |

Evaluation Metrics

- Detection: Precision, Recall, and mean Average Precision (mAP) at IoU thresholds (e.g., 0.5, 0.5:0.95).

- Segmentation: Intersection-over-Union (IoU) / mean IoU (mIoU), and pixel accuracy for crosswalk regions.

- Operational: Frames per second (FPS) / latency, memory/power—important for embedded deployment (e.g., Jetson) [7].

- Robustness: Breakdowns by day/night, weather, occlusion level, and city/device to show generalization.

.jpeg)

.jpeg)